Recovering an old Docker registry

The background

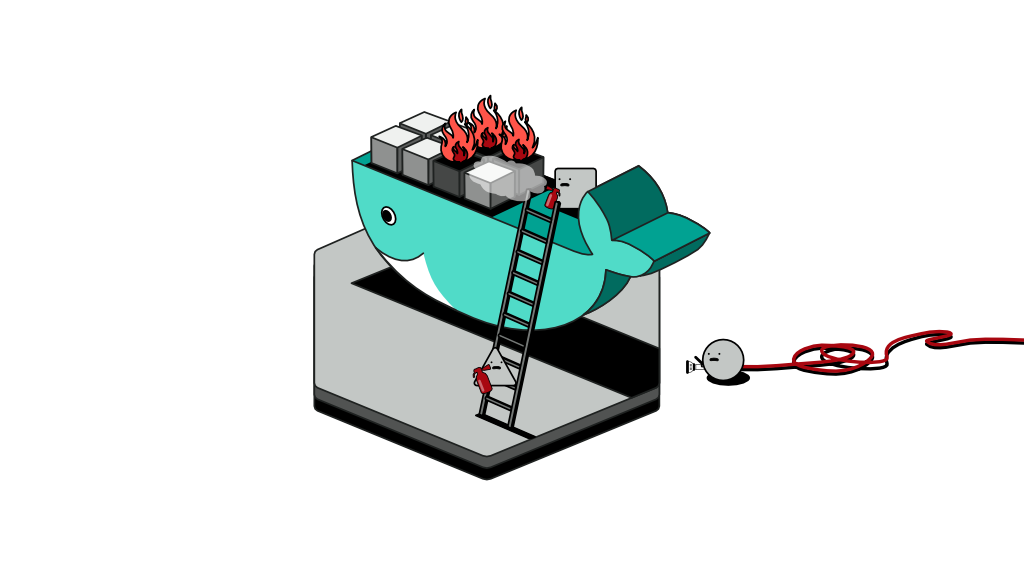

I once ran into an issue where I had to shut down some VMs on our cloud account due to a corporate restructuring exercise. Things happened so suddenly and quickly that we never had the opportunity to back anything up. Almost instantly, we had to log in to the cloud console and stop all instances.

As you’d imagine, by shutting down the servers, we achieved our main goal of suspending the service and cutting costs. However, we lost all our configurations, backup files, and data, including Docker images that were built through a complex CI/CD process.

Later on, I was contracted to revive the application and restore it to its last stable state. Rebuilding the Docker containers was going to be tedious: by my estimate, it would take at least a week's worth of work, even allowing for the fact that I tend to automate repetitive things on the fly.

In my case, thankfully, the internal node of the cluster that was shut down had not expired and I could start it. This particular VM was the node running a local Docker registry, and all its files were stored on the host path itself.

In this article I’m going to demonstrate the steps I took to retrieve the containers of our application so I can revive it quickly without having to rebuild.

The problem

We used to run a bare-metal Kubernetes cluster with multiple nodes. The cluster had a single master node and a single internal-purposes node aside from all the other worker nodes. A private Docker registry was running on that internal node with a host path folder mounted onto the registry pod.

As I returned to the cloud console, I realised the master node had already expired and was no longer available for me to renew. In fact, all other nodes were gone except that particular internal node. With the master node gone, we were unable to restore the ingress controller that was directing traffic through a domain name into the private registry. The registry was simply not accessible.

The solution

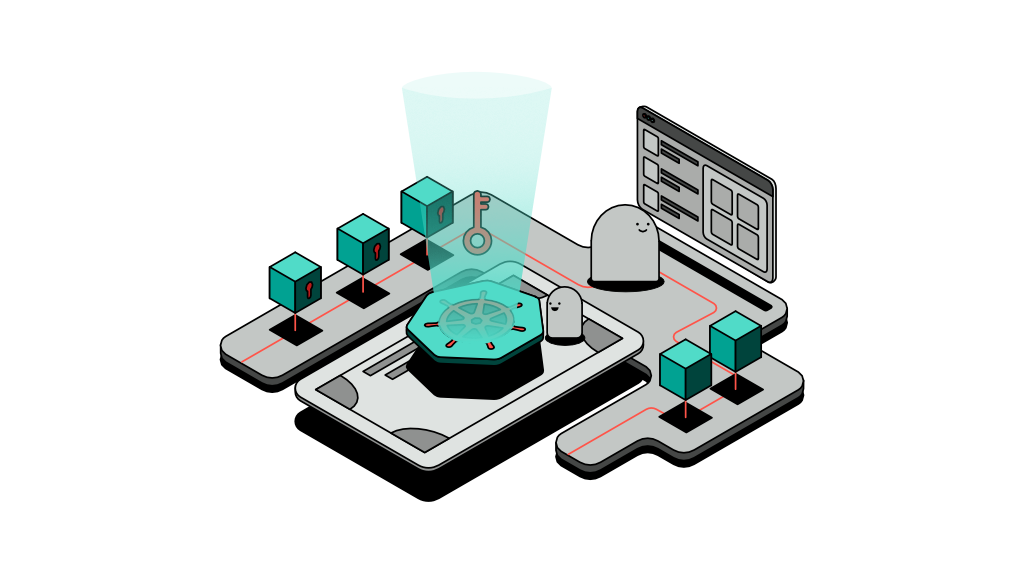

The solution was simple enough, yet it took some tinkering to arrive at a straightforward method: rely solely on the host’s Docker and forget about Kubernetes.

I logged in to the server and made sure all mounted volumes were intact. Next, I copied those files somewhere locally for safe keeping. In fact, I even made a backup copy on the server itself. I do this as a safety measure in case I need to revert while experimenting with the registry.
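The on-server backup can be sketched as a small shell function. This is only an illustration under assumptions: the source path /mnt/registry_data is hypothetical (the article does not name the original folder), and backup_registry_data is a made-up helper name. It copies the folder with attributes preserved and then compares the two trees.

```shell
# Sketch: copy the registry's host-path folder and verify the copy.
# $1 = source folder, $2 = destination folder (must not exist yet).
backup_registry_data() {
  if [ -e "$2" ]; then
    echo "refusing to overwrite existing $2" >&2
    return 1
  fi
  # -a preserves permissions, timestamps and symlinks;
  # diff -r exits non-zero if any file differs or is missing.
  cp -a "$1" "$2" && diff -r "$1" "$2" > /dev/null
}

# Example call (the source path is an assumption):
# backup_registry_data /mnt/registry_data /mnt/registry_data_backup
```

Refusing to write into an existing destination keeps a second run from nesting a copy inside the first backup.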

At this point, trying to wake the original registry image that was running in the Kubernetes cluster would only lead to a tonne of issues, since the network (master) layer is gone. Even if it could be done, it would have taken a lot of tinkering. Instead, I created a brand new registry and mounted the backup folder that I created onto it. The trick is in navigating your way around it, so let's begin.

The steps

Step 1: Run a new registry container and configure it to point to the mount folder used in the previous configuration. For this, I followed Docker's official documentation.

$ docker run -p 5000:5000 --restart=always --name registry -v /mnt/registry_data_backup:/var/lib/registry registry:2

Notice how I pointed the volume to the backup folder instead of the original. I did this to ensure the original files remain intact in case the new container overwrites anything in the folder. Because the command runs in the foreground, you can leave it running in that terminal, open a new window, and ssh to the server again. By now, you should have a brand new Docker registry running on port 5000.
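If you would rather not keep a terminal tied up, the same container can be started detached with docker run's -d flag and then polled until it answers. The sketch below assumes the registry is reachable at http://localhost:5000; wait_for_registry is a hypothetical helper name, and the default of 10 attempts is arbitrary.

```shell
# Sketch: poll the registry's base endpoint until it responds.
# $1 = base URL, $2 = maximum number of attempts (default 10).
wait_for_registry() {
  url="$1"
  attempts="${2:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # /v2/ is the API version check; -f makes curl fail on HTTP errors
    if curl -fsS "$url/v2/" > /dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example (same command as above, with -d added to run in the background):
# docker run -d -p 5000:5000 --restart=always --name registry \
#   -v /mnt/registry_data_backup:/var/lib/registry registry:2
# wait_for_registry http://localhost:5000 && echo "registry is up"
```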

It’s now possible to access images that existed in the old registry, but the seemingly obvious attempt of pulling an image directly results in the error below. I didn't dig too deeply into this, but the output points to a likely cause: with no tag given, Docker defaults to the latest tag, and this image had only been pushed with explicit version tags, as the tag listing in Step 3 shows.

$ docker pull localhost:5000/app-backend-service

OUTPUT

Using default tag: latest

Error response from daemon: manifest for localhost:5000/app-backend-service:latest not found: manifest unknown: manifest unknown

Instead, you need a way to list the exact tags available in order to get past that manifest unknown error.
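One way to check what the registry actually holds for a given tag is to ask its HTTP API whether a manifest exists. This is a minimal sketch, not something from the original recovery: manifest_status and tag_exists are hypothetical helper names, and the Accept headers are an assumption about which manifest formats the old images use.

```shell
# Sketch: print the HTTP status for a manifest lookup.
# $1 = registry base URL, $2 = repository, $3 = tag
manifest_status() {
  # -I sends a HEAD request, -o discards the headers,
  # -w prints only the status code
  curl -s -o /dev/null -I -w '%{http_code}' \
    -H 'Accept: application/vnd.docker.distribution.manifest.v2+json' \
    -H 'Accept: application/vnd.oci.image.manifest.v1+json' \
    "$1/v2/$2/manifests/$3"
}

# Succeeds only when the registry reports the manifest as present.
tag_exists() {
  [ "$(manifest_status "$@")" = "200" ]
}

# Example:
# tag_exists http://localhost:5000 app-backend-service latest \
#   || echo "no such tag"
```

A 404 here for the latest tag would be consistent with the manifest unknown error shown above.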

Step 2: To list available tags, you first need to list available images and we can do so using the registry’s web APIs as follows:

$ curl localhost:5000/v2/_catalog

OUTPUT

{"repositories":["docker-registry-ui","app-ui-image","app-backend-service"]}

Step 3: Now you can list the tags for the images you would like to copy.

$ curl localhost:5000/v2/app-backend-service/tags/list

OUTPUT

{"name":"app-backend-service","tags":["1.0.3","1.0.4","1.1.0"]}

Step 4: You can now pull a specific image from the registry into the local Docker on the VM.

$ docker pull localhost:5000/app-backend-service:1.0.3

Step 5: Tag the local image you just pulled with a new path to push to.

$ docker tag localhost:5000/app-backend-service:1.0.3 new-registry-example.com/app-backend-service:1.0.3

Step 6: Push the image to the new registry.

$ docker push new-registry-example.com/app-backend-service:1.0.3

You may repeat the steps above to copy all other images you had in the old registry.
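Repeating Steps 2 to 6 by hand gets tedious with many images, so a loop over the catalog is one option. The following is a rough sketch rather than the script I actually ran: json_array and migrate_all are hypothetical helpers, the JSON parsing is deliberately naive (it assumes the compact single-line responses shown above), and it assumes you are already logged in to the new registry.

```shell
# Sketch: print each string of the array stored under key $1
# in the single-line JSON read from stdin. Prints nothing if
# the key is missing or null.
json_array() {
  sed -n "s/.*\"$1\":\[\([^]]*\)].*/\1/p" | tr ',' '\n' | tr -d '" ' | awk 'NF'
}

# Sketch: copy every tag of every repository.
# $1 = old registry host:port, $2 = new registry host
migrate_all() {
  old="$1"
  new="$2"
  curl -fsS "http://$old/v2/_catalog" | json_array repositories |
  while read -r repo; do
    curl -fsS "http://$old/v2/$repo/tags/list" | json_array tags |
    while read -r tag; do
      # stdin is redirected so docker cannot consume the tag list
      docker pull "$old/$repo:$tag" < /dev/null &&
      docker tag "$old/$repo:$tag" "$new/$repo:$tag" < /dev/null &&
      docker push "$new/$repo:$tag" < /dev/null ||
      echo "failed: $repo:$tag" >&2
    done
  done
}

# Example:
# migrate_all localhost:5000 new-registry-example.com
```

Note that the catalog endpoint paginates its results, so a registry with many repositories would need more than this single request.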

Conclusion

In this article, we’ve discovered a way to recover an old Docker registry that was running on a Kubernetes cluster that was destroyed. Attempting to recover the exact pod or image that Kubernetes was running for the registry will not be successful due to the absence of the Kubernetes networking layer, in this case the master node.

A hacky approach is to build a new registry that mounts the same folder as the old one, or better, a copy of it. A new registry that runs locally using only Docker gives us access to the images stored in it, but there is a trick to navigating it: use its web APIs to find the exact tags, which gets around the manifest unknown error.

Once the exact tags to copy are found, pulling, tagging and pushing the Docker images into a new registry is a straightforward process that you are most likely familiar with.